- Chinese startup DeepSeek triggered panic among investors in top AI companies like Nvidia.
- DeepSeek claims to have built AI that rivals OpenAI’s o1 but with less compute.
- That could mean lower demand for AI chips, but some analysts and AI leaders don’t agree.

A week after DeepSeek launched an industry-shaking AI model on Inauguration Day, investors have decided what it all means: a market-moving reassessment of AI’s multi-trillion dollar run.

DeepSeek, a spinout from a Chinese hedge fund, appears to have matched the capabilities of top AI models while using fewer, less-advanced chips than those its American counterparts have spent billions of dollars on in capital expenditure.

On Monday, that sparked panic among investors who thought that more efficient AI would mean lower demand for the advanced chips needed to power models like OpenAI’s ChatGPT or Google’s Gemini.

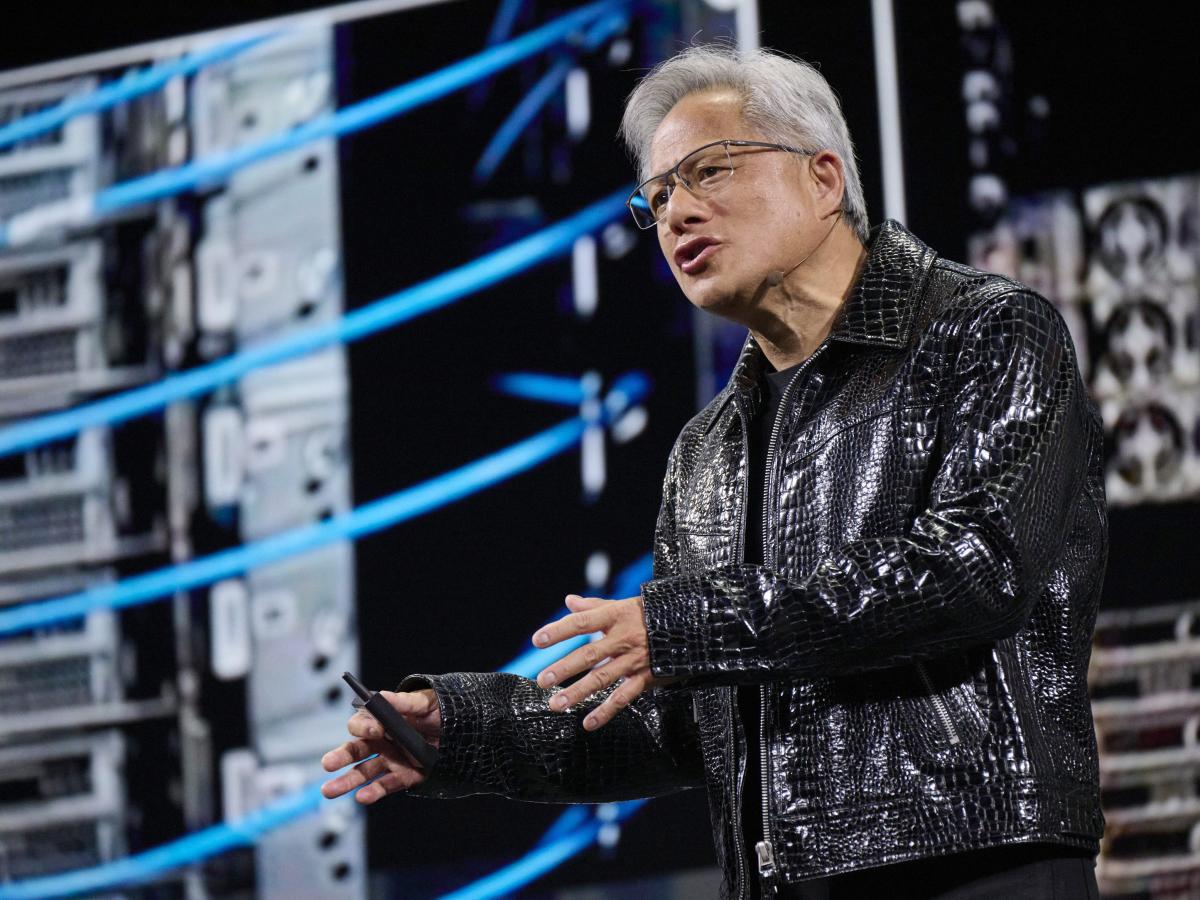

It’s why the sell-off was felt most by key companies in the AI supply chain. Nvidia, the chip giant that has added around $2.7 trillion to its market capitalization since the start of the generative AI boom, fell by as much as 18% on Monday. It suffered the largest single-day loss of market value in US stock market history, with $589 billion wiped out.

Others, like ASML, AMD, ARM, and a string of Japanese chipmakers tied to the chip-fueled AI industry, also took a hard fall after investors reckoned with the idea that a frontier-level AI model, like OpenAI’s o1, could be emulated with far less computing power.

While DeepSeek has called into question trillions of dollars in AI infrastructure spending, not everyone is convinced by the extent of the market’s movements — and it’s largely down to compute.

Hamish Low, an analyst at research firm Enders Analysis, told Business Insider that the chip stock sell-off seems “quite overblown” as “being able to use compute much more efficiently,” a key claim of DeepSeek’s R1 release, “is by no means bad for compute demand.”

Several tech leaders, such as Microsoft CEO Satya Nadella, have taken to social media to make a similar point by citing the Jevons paradox, the idea that as the cost of using a resource falls, demand for it will go up, not down.

As Nadella put it on X: “Jevons paradox strikes again! As AI gets more efficient and accessible, we will see its use skyrocket, turning it into a commodity we just can’t get enough of.”

Or, as former Intel CEO Pat Gelsinger put it in an X post on Monday, “Computing obeys the gas law.”

He added, “Making it dramatically cheaper will expand the market for it. The markets are getting it wrong, this will make AI much more broadly deployed.”

That suggests AI leaders want more efficiency alongside more computing power.

Ethan Mollick, a Wharton professor who studies AI, echoed this point. “Everyone in the space is compute constrained,” he wrote in an X post on Monday. “More efficient models mean those with compute will still be able to use it to serve more customers and products at lower prices & power impact.”

Similarly, Bernstein analysts wrote in a Monday investor note that their “initial reaction does not include panic.” The analysts, also citing the Jevons paradox, said that “any new compute capacity unlocked is far more likely to get absorbed due to usage and demand increase vs impacting long-term spending outlook at this point.”

Meanwhile, Dan Ives, a Wedbush analyst, used a note to remind investors of the bull case for Nvidia. He wrote that while launching a competitive model for consumers was one thing, Nvidia’s “broader AI infrastructure” involving robotics, for instance, “is a whole other ballgame.”

AI model developers have also been very clear about their intent to buy more AI hardware in the near future. Last week, both OpenAI and Meta announced plans to sharply increase their investment in AI chips and related infrastructure.

The ChatGPT maker announced a $500 billion initiative called Stargate to that end, while Meta CEO Mark Zuckerberg said his company was increasing its capital expenditure on AI this year to $65 billion.

Taken together, these initiatives signal a serious willingness from top AI players in Silicon Valley to continue spending on the products sold by the companies hit hardest by the market rout that DeepSeek triggered.

However, for other industry watchers, there remains a sobering rationale behind Monday’s market sell-off.

Javier Correonero, an equity analyst at Morningstar, told BI that investors will be conscious that if DeepSeek’s claims hold true, then there is reason to question whether Big Tech firms like OpenAI, Meta, and others need to spend billions of dollars on securing extra chips.

“In my view, in the short-medium term, this could be bearish because maybe now the Big Tech firms that are doing all the capex will start focusing more on optimizing all their existing AI infrastructure rather than keep on acquiring more,” he said.

Enders Analysis’ Low made a similar point, telling BI that “DeepSeek is maybe just acting as a trigger point here for much broader investor unease around the returns on Big Tech AI capex and Nvidia’s continued rise.”

In the meantime, investors will continue to reel from the fallout of Monday’s market rout while Silicon Valley leaders unpack how DeepSeek achieved so much with seemingly so little.

Read the original article on Business Insider
